Producing Statistical Analysis Regarding Severe Accident Uncertainty at Nuclear Power Plants

By Nick Karancevic, Senior Nuclear Engineer, Fauske & Associates

The Three Mile Island Severe Accident occurred in 1979, near Harrisburg, Pennsylvania. In 1986, the Chernobyl Criticality Accident occurred. In 1986, what is widely considered as the worst commercial power reactor accident occurred at Chernobyl Power Station. In 1988, the US Nuclear Regulatory Commission issued Generic Letter 88-20, |

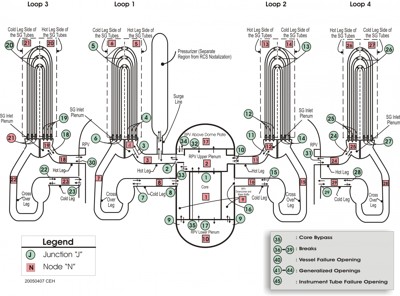

| MAAP5 Primary System Nodalization Scheme |

"Individual Plant Examination for Severe Accident Vulnerabilities". These events prompted a development of computer codes that could accurately simulate Severe Accidents, such as Three Mile Island, for accident management evaluations and prompted the development of Severe Accident Management Guidelines (SAMG). But, what kind of computing power was available back then? This would in part guide the future definition of “accurate simulation”. And more relevant, what kind of computing power is available now?

I can vividly recall one of my friends stating that there would soon be a 1000 MHz CPU available. We were all in disbelief and shock. The year was 2000, and such things were unheard of. Fast forward to the year 2018, and I find myself writing a business case to purchase a single server that will sit on somebody’s desk, with 112 CPU cores, which in the grand scheme of things is really not that many compared to the computing power harnessed at the United States national laboratories.

A 2003 European Commission study noted that “core damage frequencies of 5 × 10⁻⁵ [per reactor-year] are a common result” or, in other words, one severe accident would be expected every 20,000 reactor-years. From this value, assuming there are 500 reactors in use in the world, one core damage incident would be expected to occur every 40 years. Ominously, the Fukushima Daiichi nuclear disaster occurred in the year 2011.
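
The arithmetic behind those figures can be checked in a few lines. The sketch below uses only the two numbers quoted above; the last function goes one step further and is my own assumption, not part of the study: it treats core damage events as a Poisson process with a constant rate, which gives a rough probability of seeing at least one event over a given period.

```python
import math

# Figures quoted in the text above
CORE_DAMAGE_FREQUENCY = 5e-5   # core damage events per reactor-year
REACTORS_IN_USE = 500          # worldwide fleet size assumed in the text


def reactor_years_per_event(frequency):
    """Expected reactor-years between core damage events."""
    return 1.0 / frequency


def fleet_years_per_event(frequency, reactors):
    """Expected calendar years between events across the whole fleet."""
    return reactor_years_per_event(frequency) / reactors


def probability_of_at_least_one(frequency, reactors, years):
    """ASSUMPTION (not from the study): events follow a Poisson process
    with a constant rate, so P(at least one) = 1 - exp(-expected count)."""
    expected_events = frequency * reactors * years
    return 1.0 - math.exp(-expected_events)


if __name__ == "__main__":
    print(round(reactor_years_per_event(CORE_DAMAGE_FREQUENCY)))                  # 20000
    print(round(fleet_years_per_event(CORE_DAMAGE_FREQUENCY, REACTORS_IN_USE)))   # 40
    p = probability_of_at_least_one(CORE_DAMAGE_FREQUENCY, REACTORS_IN_USE, 40)
    print(f"{p:.2f}")                                                             # 0.63
```

Under that Poisson assumption, "one event every 40 years" is an average, not a schedule: the chance of at least one event in any given 40-year window comes out to roughly 63 percent.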

After the Fukushima Severe Accident, it was time to revisit the severe accident nuclear codes. A lot of research and wisdom have resulted from the Three Mile Island accident; however, a lot more remained unknown. For example, what are the specific combinations of criteria needed for the nuclear fuel pins to fail? Now that we have more computing speed available, can we run a more detailed simulation, with more nodes and more physics models, and still expect an answer overnight, if not within a few hours?

Most importantly, we may never know the exact criteria for nuclear fuel pin failure, or which of two excellent candidate correlations should be used for some key phenomena. We may be able to tame the numerical uncertainty of complex engineering calculations, but we will never eliminate it entirely. For these reasons, the solution stands out: why not simulate the same accident scenario using all of the available state-of-the-art correlations and a representative range of key parameters, to get the best possible picture of the scenario? Statistical sampling and analysis tools can help the severe accident analyst to say, “not only is the best case simulation showing this result, but an uncertainty and sensitivity analysis shows the same”. In the year 2009, the NRC issued NUREG-1855, Volume 1, “Guidance on the Treatment of Uncertainties Associated with PRAs in Risk-Informed Decision Making”. Why not run a sensitivity and uncertainty analysis to determine how much time a nuclear reactor operator has to complete some action that will prevent core damage? I cannot envision a world where uncertainty and sensitivity analysis don’t become mainstream requirements of nuclear analysis, complementing other data analytics of today.
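
To make the idea concrete, here is a minimal sketch of such a study, under loud assumptions. The function `toy_time_to_core_damage` is a hypothetical stand-in for a real severe accident code run: its formula, the parameter names and ranges, and the two "candidate correlations" are all invented for illustration and carry no physical meaning. Only the workflow is the point: sample the uncertain inputs, run the same scenario many times, then report a range instead of a single number.

```python
import random
import statistics


def toy_time_to_core_damage(decay_heat_fraction, water_inventory_kg, correlation):
    """HYPOTHETICAL stand-in for one severe accident code run (result in hours).
    Not a physical model: it only mimics 'more heat or less water, less time'."""
    # Two made-up candidate correlations that differ by a constant factor
    factor = {"correlation_A": 1.00, "correlation_B": 0.85}[correlation]
    return factor * water_inventory_kg / (decay_heat_fraction * 1.0e7)


def run_uncertainty_study(n_samples=1000, seed=0):
    """Sample the uncertain inputs (illustrative ranges) and run each case."""
    rng = random.Random(seed)  # fixed seed so the study is repeatable
    results = []
    for _ in range(n_samples):
        sample = {
            "decay_heat_fraction": rng.uniform(0.010, 0.020),
            "water_inventory_kg": rng.uniform(1.5e5, 2.5e5),
            "correlation": rng.choice(["correlation_A", "correlation_B"]),
        }
        results.append((sample, toy_time_to_core_damage(**sample)))
    return results


def summarize(results):
    """Uncertainty: 5th percentile, median and 95th percentile of the outcome."""
    times = [hours for _, hours in results]
    cuts = statistics.quantiles(times, n=20)  # 19 cut points: 5%, 10%, ..., 95%
    return {"p05": cuts[0], "median": statistics.median(times), "p95": cuts[-1]}


def mean_by_correlation(results):
    """Crude sensitivity check: mean outcome grouped by correlation choice."""
    grouped = {}
    for sample, hours in results:
        grouped.setdefault(sample["correlation"], []).append(hours)
    return {name: statistics.mean(values) for name, values in grouped.items()}


if __name__ == "__main__":
    study = run_uncertainty_study()
    print(summarize(study))
    print(mean_by_correlation(study))
```

With a real code in place of the toy function, the 5th percentile is the kind of number that answers the operator question above: the time available in all but the least favorable few percent of sampled cases. Each sampled run is independent of the others, so a study like this spreads naturally across many CPU cores.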

There is much to share on sensitivity and uncertainty analysis for Severe Accident mitigation. Continue to see content like this by subscribing to our blog below.